Java application servers such as Tomcat, JBoss, WebSphere and GlassFish are multithreaded: when a user opens a web page (that is, submits an HTTP request), the app server tries to find a free thread and assigns the job of serving the request to it.

One common problem I recently came across arises when a user performs a long-running task such as reporting or data transformation. If you simply allow a user to execute a long-running task, your app server is vulnerable to thread starvation: genuine user load, or even attackers, can issue a massive number of requests, causing your server to run out of free threads and making the application unavailable.

One approach is to implement a resource-bounding pattern such as thread pooling. The pattern works like chefs in a restaurant. Say there are 2 chefs in a restaurant, and each of them needs 10 minutes to cook an order. The next free chef takes orders in the order they arrive, and if both chefs are busy, subsequent orders are queued.

To illustrate this with an example, the following class represents a long-running task. Here the task simply sleeps for 10 seconds to simulate the long run. A log message with the task id and the name of the thread running the task is printed when the task begins and ends.

[sourcecode language="java"]
// Assumption: the logger is SLF4J, which matches the LoggerFactory.getLogger(...) call
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class HeavyTask implements Runnable {

    private static final Logger logger = LoggerFactory.getLogger(HeavyTask.class);

    private final int taskId;

    public HeavyTask(int taskId) {
        this.taskId = taskId;
    }

    @Override
    public void run() {
        logger.info(String.format("Task #%s is starting on thread %s...", taskId, Thread.currentThread().getName()));
        try {
            // Simulate 10 seconds of heavy work
            Thread.sleep(10 * 1000);
        } catch (InterruptedException e) {
            logger.error(String.format("Task #%s running on thread %s is interrupted", taskId, Thread.currentThread().getName()), e);
            // Restore the interrupt flag so the pool can see the interruption
            Thread.currentThread().interrupt();
            return;
        }
        logger.info(String.format("Task #%s on thread %s finished", taskId, Thread.currentThread().getName()));
    }
}
[/sourcecode]

Next we have the HeavyTaskRunner class, a service class that implements business functionality. This class also instantiates the thread pool through the Java ExecutorService API, with a maximum of 2 threads, using the Executors.newFixedThreadPool() factory method.

[sourcecode language="java"]
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class HeavyTaskRunner {

    private static final int NUM_THREADS = 2;

    private final ExecutorService executorService;

    private int taskCounter = 0;

    public HeavyTaskRunner() {
        // Fixed pool: at most NUM_THREADS tasks run at once, the rest wait in a queue
        executorService = Executors.newFixedThreadPool(NUM_THREADS);
    }

    /**
     * Create a new {@link HeavyTask} and submit it to the thread pool for execution
     */
    public int runTask() {
        int nextTaskId;
        // Guard the counter so concurrent requests get distinct task ids
        synchronized (this) {
            nextTaskId = taskCounter++;
        }
        executorService.submit(new HeavyTask(nextTaskId));
        return nextTaskId;
    }
}
[/sourcecode]

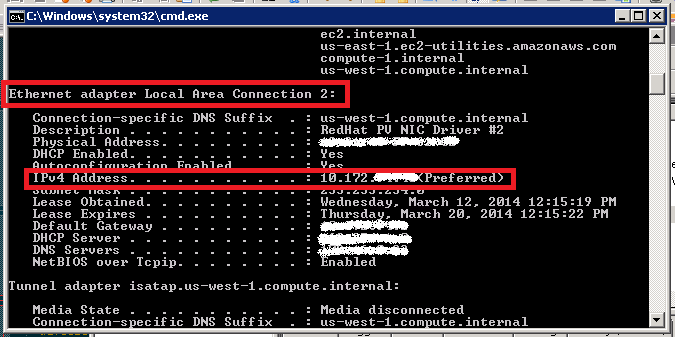

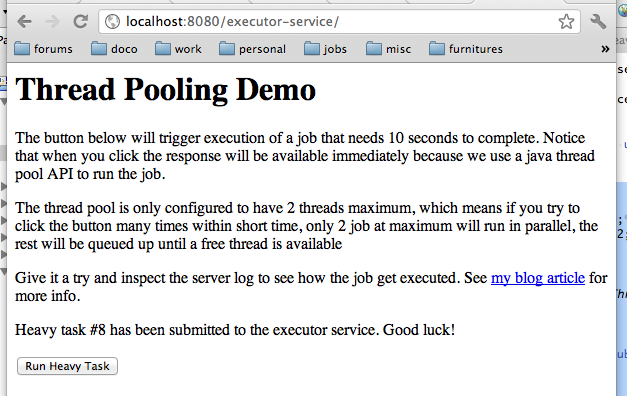

Finally, I wrapped this in a simple Spring MVC web application. You can download the source code from my Google Code site and run it in an embedded Tomcat container using the mvn tomcat:run command.

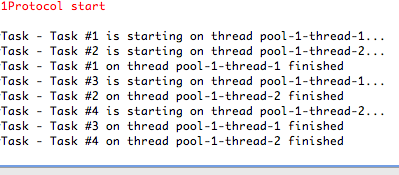

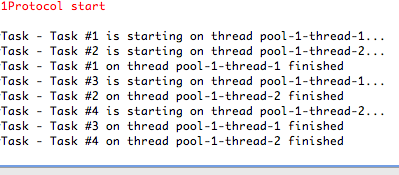

The server log shows what happens after 4 tasks were run in less than 10 seconds: no more than 2 tasks ever run simultaneously. If all threads are busy, a task is queued until one becomes free.
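The same behaviour can be checked outside the web application. The following is a minimal sketch, not part of the demo project: the class name FixedPoolDemo and the method maxConcurrent are made up for illustration. It submits several sleeping tasks to a fixed pool and records the highest number of tasks seen running at the same time, which should never exceed the pool size.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical helper class, not part of the original demo application
class FixedPoolDemo {

    /**
     * Submits taskCount sleeping tasks to a fixed pool of poolSize threads and
     * returns the highest number of tasks observed running at the same time.
     */
    static int maxConcurrent(int taskCount, int poolSize, long sleepMillis) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        AtomicInteger running = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(taskCount);

        for (int i = 0; i < taskCount; i++) {
            pool.submit(() -> {
                // Count this task as running and remember the highest count seen
                int now = running.incrementAndGet();
                peak.accumulateAndGet(now, Math::max);
                try {
                    Thread.sleep(sleepMillis); // stand-in for the heavy work
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    running.decrementAndGet();
                    done.countDown();
                }
            });
        }

        try {
            done.await(); // wait until every task has finished
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            pool.shutdown();
        }
        return peak.get();
    }

    public static void main(String[] args) {
        // 4 tasks on 2 threads: the peak should not exceed 2
        System.out.println("Peak concurrency: " + maxConcurrent(4, 2, 200));
    }
}
```

The sleep time here is shortened from the 10 seconds used in HeavyTask only so the sketch finishes quickly.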

This technique is of course not without a downside. Although we have capped the thread (and memory) resources at a certain limit, the queue can still grow without bound, so our application is still vulnerable to denial-of-service attacks (if an attacker submits long-running tasks a thousand times, the queue grows very large, making the service unavailable to genuine users).
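One possible way to limit the queue as well, which the demo application does not do, is to build the pool directly with ThreadPoolExecutor and a bounded ArrayBlockingQueue, so that extra tasks are rejected once both the threads and the queue are full. The sketch below assumes that rejecting work is acceptable for your application; the class name BoundedTaskRunner and its methods are hypothetical.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical variant of HeavyTaskRunner with a bounded queue
class BoundedTaskRunner {

    private final ThreadPoolExecutor executor;

    BoundedTaskRunner(int numThreads, int queueCapacity) {
        executor = new ThreadPoolExecutor(
                numThreads, numThreads,                    // fixed number of threads
                0L, TimeUnit.MILLISECONDS,                 // keep-alive, unused when core == max
                new ArrayBlockingQueue<>(queueCapacity),   // at most queueCapacity waiting tasks
                new ThreadPoolExecutor.AbortPolicy());     // throw when threads and queue are full
    }

    /** Returns true if the task was accepted, false if the pool and queue are full. */
    boolean tryRun(Runnable task) {
        try {
            executor.execute(task);
            return true;
        } catch (RejectedExecutionException e) {
            return false;
        }
    }

    /** Stops accepting new tasks; already submitted tasks still run. */
    void shutdown() {
        executor.shutdown();
    }
}
```

With 2 threads and a queue capacity of 1, for example, two long-running tasks start immediately, a third waits in the queue, and a fourth is refused until capacity frees up. The caller can then tell the user to try again later instead of letting the backlog grow.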

The concurrency chapter of the Java SE tutorial is a good reference if you want to dig deeper into other techniques for managing threads and long-running tasks.

Demo Application Source Code

Source code for the demo application above is available for browsing / checkout via Google Code hosting:

Ensure you have the latest versions of the JDK and Maven installed. You can run the application in an embedded Tomcat with the mvn tomcat:run command from your shell.